For a few days now, there has been a hot topic in the graphics card community: the VRAM limitations of the GeForce GTX 970. To make a long story short (see the links at the end of the article), the GTX 970 comes with 4GB of GDDR5 memory. Some GTX 970 owners have noticed that VRAM allocation is limited to 3.5GB even though the board has 4GB of VRAM: the games they played couldn't allocate all 4GB for game resources.

Of course, major review sites contacted NVIDIA and here is NVIDIA’s answer:

The GeForce GTX 970 is equipped with 4GB of dedicated graphics memory. However the 970 has a different configuration of SMs than the 980, and fewer crossbar resources to the memory system. To optimally manage memory traffic in this configuration, we segment graphics memory into a 3.5GB section and a 0.5GB section. The GPU has higher priority access to the 3.5GB section. When a game needs less than 3.5GB of video memory per draw command then it will only access the first partition, and 3rd party applications that measure memory usage will report 3.5GB of memory in use on GTX 970, but may report more for GTX 980 if there is more memory used by other commands. When a game requires more than 3.5GB of memory then we use both segments.

We understand there have been some questions about how the GTX 970 will perform when it accesses the 0.5GB memory segment. The best way to test that is to look at game performance. Compare a GTX 980 to a 970 on a game that uses less than 3.5GB. Then turn up the settings so the game needs more than 3.5GB and compare 980 and 970 performance again.

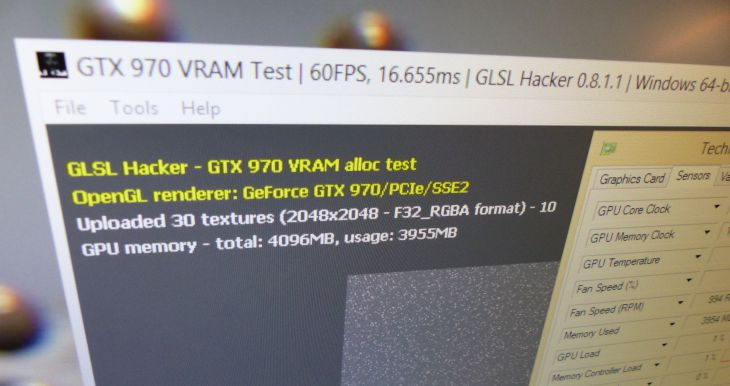

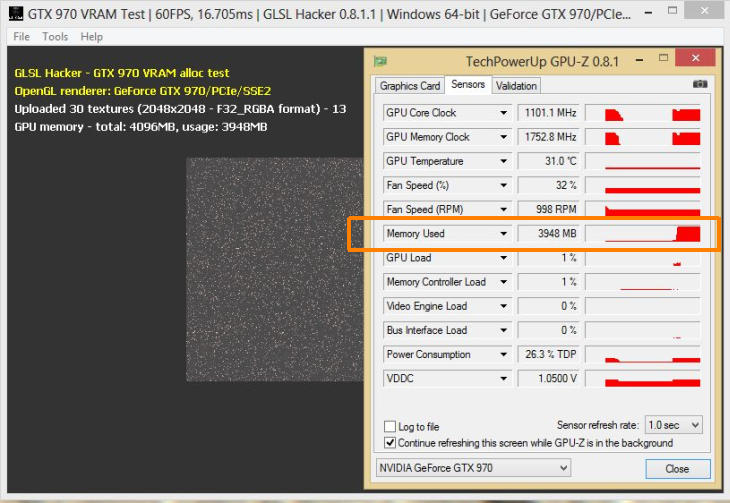
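To make the segmentation in NVIDIA's statement more concrete, here is a minimal Python sketch of a "fill the priority segment first, spill over only when it is full" placement policy. It is a toy model, not NVIDIA's driver logic: the class `SegmentedVram`, the 3584 MiB / 512 MiB capacities and the simple spill-over rule are hypothetical and based only on the statement above (it ignores the "per draw command" detail entirely).

```python
from dataclasses import dataclass
from typing import Optional

MIB = 1024 * 1024


@dataclass
class SegmentedVram:
    """Toy model of a 4GB board split into a 3.5GB and a 0.5GB segment (hypothetical)."""

    fast_capacity: int = 3584 * MIB  # assumed higher-priority 3.5GB segment
    slow_capacity: int = 512 * MIB   # assumed 0.5GB segment
    fast_used: int = 0
    slow_used: int = 0

    def allocate(self, size: int) -> Optional[str]:
        """Place a buffer in the fast segment while it fits, then spill into the slow one.

        Returns "fast" or "slow", or None when neither segment has room left.
        """
        if self.fast_used + size <= self.fast_capacity:
            self.fast_used += size
            return "fast"
        if self.slow_used + size <= self.slow_capacity:
            self.slow_used += size
            return "slow"
        return None

    def reported_usage(self) -> int:
        """Bytes in use across both segments, as a monitoring tool might report them."""
        return self.fast_used + self.slow_used


# Allocate 256 MiB buffers until the model runs out of room.
vram = SegmentedVram()
placements = []
while (segment := vram.allocate(256 * MIB)) is not None:
    placements.append(segment)
print(placements.count("fast"), placements.count("slow"))  # 14 2
print(vram.reported_usage() // MIB)  # 4096
```

In this model, any workload that stays under 3.5GB never touches the slow segment, which matches NVIDIA's description of what monitoring tools report; only the last two 256 MiB buffers land in the 0.5GB section.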

This morning I did a quick VRAM allocation test with GLSL Hacker to look for this 3.5GB limit. I coded a small test that creates tons of textures to fill the graphics memory. This test is available in the host_api/Misc/GTX970-VRAM/ folder of the code sample pack.

And guess what? I didn’t see that 3.5GB limit. I could allocate 4GB of graphics memory to store all my dummy textures:

Hummmm….

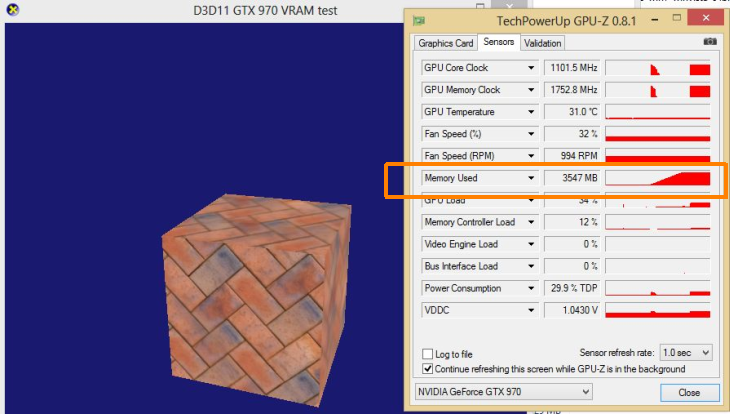
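The GLSL Hacker test itself is not reproduced here, but the idea behind it (create fixed-size dummy textures until allocation fails, then add up what was allocated) can be sketched in a few lines of Python. The `allocate` callback is a hypothetical stand-in for a real texture creation call; in OpenGL that might be a `glTexImage2D` call followed by a check for `GL_OUT_OF_MEMORY`, keeping in mind that a driver may defer the actual VRAM commit until a texture is first used.

```python
from typing import Callable

MIB = 1024 * 1024


def texture_bytes(width: int, height: int, bytes_per_texel: int = 4) -> int:
    """Size of one uncompressed 2D texture without mipmaps (RGBA8 = 4 bytes per texel)."""
    return width * height * bytes_per_texel


def fill_vram(allocate: Callable[[int], bool], size: int, max_textures: int = 100_000) -> int:
    """Create dummy textures of `size` bytes until `allocate` fails; return total bytes allocated."""
    total = 0
    for _ in range(max_textures):
        if not allocate(size):
            break
        total += size
    return total


def make_budget_allocator(budget: int) -> Callable[[int], bool]:
    """Hypothetical stand-in for a real texture upload: succeeds while under `budget` bytes."""
    used = 0

    def allocate(size: int) -> bool:
        nonlocal used
        if used + size > budget:
            return False
        used += size
        return True

    return allocate


tex = texture_bytes(4096, 4096)  # 64 MiB per 4096x4096 RGBA8 texture
print(fill_vram(make_budget_allocator(4096 * MIB), tex) // tex)  # 64 textures
print(fill_vram(make_budget_allocator(3584 * MIB), tex) // tex)  # 56 textures
```

With 64 MiB textures, a full 4GB budget holds 64 of them while a 3.5GB cap stops at 56, so the two behaviours are easy to tell apart in a test like this.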

In this YouTube video, I found a Direct3D test that does the same thing: it allocates lots of textures. And this time I saw the limit:

To make your life easier, I uploaded this test, along with some missing DLLs (D3D compiler 47), to the Geeks3D server:

[download#426#image]

According to this little test, it seems that OpenGL applications are not affected by the VRAM allocation limitation of the GTX 970 (but they are certainly affected by the reduced speed of the 0.5GB memory, which runs at 1/7th of the speed of the 3.5GB memory pool). Most video games are Direct3D applications, which explains why so many people have seen this issue.
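The "1/7th" figure is consistent with simple peak-bandwidth arithmetic (bus width times per-pin data rate, divided by 8), provided you assume, as this sketch does, that the 3.5GB segment is served by 224 bits of the GTX 970's 256-bit bus, the 0.5GB segment by the remaining 32 bits, and that the GDDR5 runs at an effective 7 Gbps per pin. These are assumptions for illustration, not results of the test above.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s: bus width (bits) x per-pin data rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8


GDDR5_RATE_GBPS = 7.0  # assumed effective per-pin data rate of the GTX 970's GDDR5

full_bus = peak_bandwidth_gbs(256, GDDR5_RATE_GBPS)   # whole 256-bit interface
big_pool = peak_bandwidth_gbs(224, GDDR5_RATE_GBPS)   # assumed path to the 3.5GB segment (7/8)
small_pool = peak_bandwidth_gbs(32, GDDR5_RATE_GBPS)  # assumed path to the 0.5GB segment (1/8)
print(full_bus, big_pool, small_pool)  # 224.0 196.0 28.0
print(big_pool / small_pool)           # 7.0
```

Under these assumptions the full bus gives 224 GB/s, a 224-bit path about 196 GB/s and a 32-bit path 28 GB/s, so the small pool peaks at exactly 1/7th of the large one.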

Now the question: why can OpenGL apps allocate up to 4GB of memory while D3D apps are limited to 3.5GB? (And why didn't the major review sites catch this issue in their detailed reviews???)

Related Links

- gtx 970 vram,ROPs and L2Cache @ NVIDIA forum

- NVIDIA Discloses Full Memory Structure and Limitations of GTX 970 @ pcper.com

- NVIDIA Publishes Statement on GeForce GTX 970 Memory Allocation @ anandtech.com

- Nvidia explains the GeForce GTX 970’s memory ‘problems’

- GTX 970s can only use 3.5GB of 4GB VRAM issue @ overclock.net

It seems it is not a hard limit; the driver simply tries not to exceed 3.5GB. If the driver cannot make do with only 3.5GB, it will use everything.

Perhaps you simply forced the driver to use all of the memory because your test uses all the textures in every frame, while the D3D test was not as well made.

Every supported API can allocate all the available memory. The issue in this case is not allocation but the performance hit caused by the segmentation, and how the driver's memory management works around it.

Hi JeG, you have a typo in saying “memory that run at 17/th”.

While I’m on this point, the 1/7th speed figure was attributed to Nai’s benchmark, which showed 22.35GB/s. From my understanding this isn’t the case: as stated by Nvidia, the slowdown is caused by 1/8 of the VRAM (512MB) having its L2 cache and ROPs disabled, which is why the main 3584MB portion (7/8) is unaffected and remains at 224GB/s according to the data Nvidia provided.

Technically we should call it 224-bit (192GB/s bandwidth), as both the 192b and 32b pools are accessed at the same time through the shared crossbar. The reviewer’s guide was wrong: Nvidia PR didn’t think it would be discernible from the provided data at the time, but it was; a copy of the specs shows what we all saw in reviews.

If it’s accessed at the same time, it could either store textures (always app-dependent) or perhaps even serve as a cache at some point.
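A full VRAM bandwidth test in the spirit of Nai’s benchmark measures bandwidth chunk by chunk across the whole memory and looks for chunks that fall far below the rest. The Python sketch below covers only the analysis step; the function names, the 128 MiB chunk size and the 50%-of-median threshold are hypothetical choices, and the sample values are made-up numbers for illustration, not measurements.

```python
from statistics import median
from typing import List, Optional


def slow_chunks(bandwidths_gbs: List[float], threshold: float = 0.5) -> List[int]:
    """Indices of chunks whose bandwidth is below `threshold` x the median chunk bandwidth."""
    typical = median(bandwidths_gbs)
    return [i for i, bw in enumerate(bandwidths_gbs) if bw < threshold * typical]


def first_slow_offset_mib(
    bandwidths_gbs: List[float], chunk_mib: int, threshold: float = 0.5
) -> Optional[int]:
    """Offset (in MiB) of the first slow chunk, or None if every chunk looks normal."""
    slow = slow_chunks(bandwidths_gbs, threshold)
    return slow[0] * chunk_mib if slow else None


# Made-up values for illustration only: 28 normal 128 MiB chunks, then 4 slow ones.
samples = [150.0] * 28 + [20.0] * 4
print(slow_chunks(samples))                 # [28, 29, 30, 31]
print(first_slow_offset_mib(samples, 128))  # 3584
```

Comparing against the median rather than a fixed number keeps the check independent of the card under test: only chunks that are much slower than the typical chunk get flagged.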

Thanks SMiThaYe for the typo, fixed!

I clearly noticed this problem a while ago while playing Call of Duty: Advanced Warfare with my MSI 970 OC. When you choose the maximum graphics settings, the game automatically reduces texture resolution to fit in 3.5GB rather than 4GB of VRAM.
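How the game actually decides this is not known here, but one simple way a game could pick a texture quality level against a VRAM budget is to halve texture resolution until the total fits. The Python sketch below assumes uncompressed textures (halving width and height divides the size by 4) and uses a hypothetical function name; the 3.5GB budget mirrors the behaviour described in the comment above.

```python
from typing import List

MIB = 1024 * 1024


def fit_textures_to_budget(texture_sizes: List[int], budget: int, max_drops: int = 4) -> int:
    """Return how many times texture resolution must be halved to fit `budget` bytes.

    Halving width and height divides an uncompressed texture's size by 4.
    Returns -1 if even `max_drops` halvings are not enough.
    """
    total = sum(texture_sizes)
    for drops in range(max_drops + 1):
        if total // (4 ** drops) <= budget:
            return drops
    return -1


textures = [64 * MIB] * 64  # 4096 MiB of full-resolution textures
print(fit_textures_to_budget(textures, 4096 * MIB))  # 0: fits a 4GB budget as-is
print(fit_textures_to_budget(textures, 3584 * MIB))  # 1: one halving needed for 3.5GB
```

Because each step quarters the footprint, a texture set that overshoots a 3.5GB budget by only 512 MiB still drops a full quality level, which would look like a large visual downgrade for a small memory shortfall.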

The obvious thing to point out is that DX is game-oriented.

So if the driver allocated that 0.5GB, performance might drop!

So the driver tries its best to avoid that.

Now, OpenGL was never as performance-focused for Nvidia. Don’t get me wrong: it has nothing to do with the API itself. It’s just that most gaming reviews happen on Windows with DX. So Nvidia has every incentive to make DX fast (the gamer/hobbyist market), while OpenGL is a second-class citizen (and more professionally oriented: reliability over speed).

Major review sites didn’t catch it because they basically just repeated nVidia PR; that’s the sad state of tech journalism on the internet.

@DrBalthar … yep, that’s my take on these shenanigans by NVIDIA too. They might’ve gotten away with this guard-house BS years ago, when memory allocation was far more complex to control. But now? In 2014/15? Gtf outta here! Heaven knows they have the money & resources, made by extorting ca$h from their customers... so why hasn’t any of that moolah gone into implementing memory allocation standards that at least meet the OpenGL standard?!? Are we expected to believe that hogwash press release from NVIDIA corporate? The fanboys can, but I sure as heck won’t.

@DrBalthar:

You can’t catch things like that, because you can’t see into the chip (either with 3rd-party SW or by examining the HW) and have to rely on the provided materials.

Techreport did notice some oddities and published a follow-up to their review exploring them.

Note: IIRC the NVidia control panel reportedly always showed the correct values for L2/ROPs, but apparently nobody looks there…

===

To the author of the article: don’t worry, it will come to OpenGL too with future drivers.

I just want to say that people who think 1080p gaming at ultra settings is possible on a GTX 970 with any game currently out are dead wrong. I’ve played two games with huge stuttering problems, but turn the textures down to medium and guess what: buttery smooth. Test it out with Watch Dogs and Dying Light.

I am sure most future video card reviews will include a full VRAM bandwidth test. We live and learn. *Owner of 2 GTX 970s in SLI.

The OpenGL test fails to load on my Win7 x64 with this error:

http://i.imgur.com/4yZqp7W.png