Virtual screens are a very nice feature of Mac OS X. I’m sure that GPU overclockers under Windows would love them.

Under Windows, if you have two graphics cards, there is only one way to use both GPUs with OpenGL or Direct3D: NVIDIA SLI or AMD CrossFire. What’s more, SLI and CrossFire require nearly identical graphics cards. You can’t do SLI with a GTX 780 and a GTX 460, and the same goes for CrossFire: coupling an HD 7970 and an HD 5770 is not possible. And if you have a GeForce card and a Radeon card on the same motherboard, there is nothing you can do. Under OS X, none of these situations is a problem thanks to virtual screens. A virtual screen is the combination of a renderer and a physical monitor. Under OS X, a renderer can be hardware (a GPU) or software (the Apple Software Renderer).

Then if you have two graphics cards and two monitors, you have two virtual screens. The great thing with virtual screens is that an OpenGL application can run at full speed in a virtual screen.

With OS X, you can have several different graphics cards, for example a Radeon HD 7970 and a GeForce GTX 580. If you connect a monitor to each card, you will have two virtual screens.

You don’t have to do anything to manage virtual screens. All the work (rendering context management) is done by Apple in its OpenGL framework. Depending on where the OpenGL application sits on the screen, OS X dynamically selects the right OpenGL driver plugin (NVIDIA, AMD, Intel or software renderer) and implicitly updates the rendering context.

The OS X OpenGL framework provides functions to query the number of virtual screens and to get and set the current virtual screen (CGLGetVirtualScreen and CGLSetVirtualScreen). Setting the virtual screen can be useful in some cases. For example, on a system with a GeForce GTX 280 (OpenGL 3) and a GeForce GTX 580 (OpenGL 4), tessellation (under OS X 10.9 Mavericks) is only available in the virtual screen of the GTX 580. But thanks to virtual screen management, you can display a tessellated scene on the monitor of the GTX 280 by forcing the use of the GTX 580 virtual screen. It’s like remote rendering…
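To make the get/set calls more concrete, here is a minimal sketch in C. The helper names `query_virtual_screen` and `force_virtual_screen` are hypothetical (they are not part of CGL or GLSL Hacker), and the code assumes an OpenGL context is already current on the calling thread. The `#ifdef __APPLE__` guard is only there so the file still compiles on other systems, where both helpers simply report failure.

```c
#include <stddef.h>
#ifdef __APPLE__
#include <OpenGL/OpenGL.h>  /* CGL API; link with -framework OpenGL */
#endif

/* Hypothetical helper: returns the virtual screen index used by the
   current OpenGL context, or -1 if there is no current context
   (or if CGL is not available on this platform). */
int query_virtual_screen(void)
{
#ifdef __APPLE__
    CGLContextObj ctx = CGLGetCurrentContext();
    GLint screen = -1;
    if (ctx == NULL || CGLGetVirtualScreen(ctx, &screen) != kCGLNoError)
        return -1;
    return (int)screen;
#else
    return -1;  /* CGL only exists on OS X */
#endif
}

/* Hypothetical helper: asks CGL to render the current context with the
   given virtual screen. Returns 1 on success, 0 on failure. */
int force_virtual_screen(int screen)
{
#ifdef __APPLE__
    CGLContextObj ctx = CGLGetCurrentContext();
    if (ctx == NULL)
        return 0;
    return CGLSetVirtualScreen(ctx, (GLint)screen) == kCGLNoError;
#else
    (void)screen;  /* unused outside OS X */
    return 0;
#endif
}
```

In the GTX 280 + GTX 580 example, an application would call something like `force_virtual_screen(n)` with the index of the GTX 580 virtual screen before drawing the tessellated scene. Which index maps to which card depends on the system, so it should be queried rather than hard-coded. As far as I know, the number of virtual screens is what `CGLChoosePixelFormat` returns in its last parameter.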

There is also a function to explicitly update the virtual screen rendering context, called CGLUpdateContext. Its prototype is:

CGLError CGLUpdateContext(CGLContextObj ctx);

This function is not documented, maybe because of the implicit rendering context management.

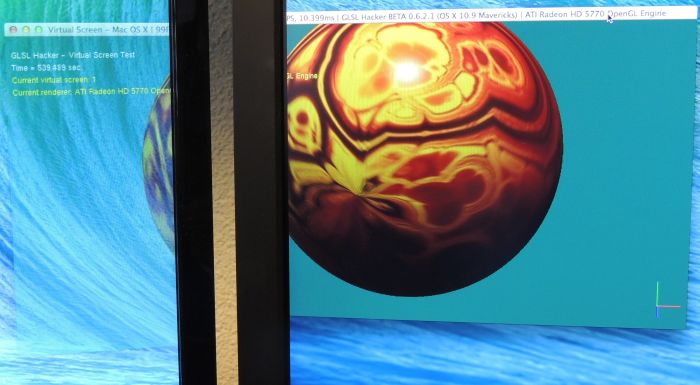

I updated the OS X version of GLSL Hacker with functions to deal with virtual screens. You can download the latest DEV version from this page. The version used for the article is GLSL Hacker 0.6.2.1. I did all my tests with OS X 10.9 Mavericks.

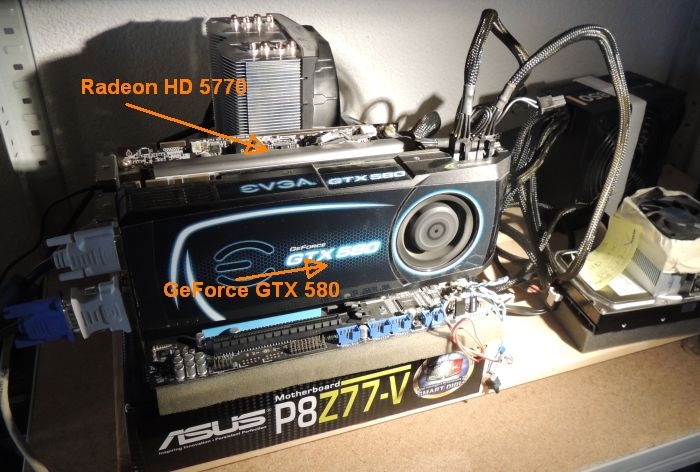

A new code sample is available in the Code Sample Pack in the moon3d/gl-210-virtual_screen_osx/ folder. This simple demo tracks the current virtual screen number and displays it. I tested the demo on my brand new hackintosh setup that has a Radeon HD 5770 + GeForce GTX 580.

Hackintosh, GeForce GTX 580 + Radeon HD 5770

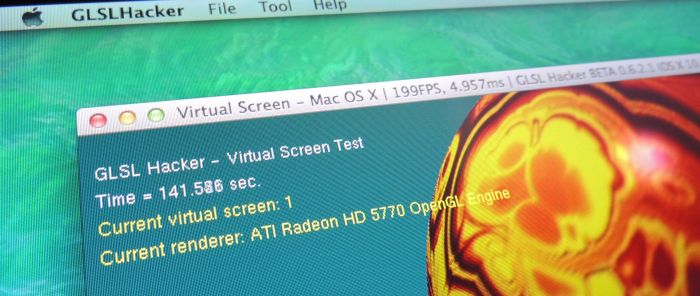

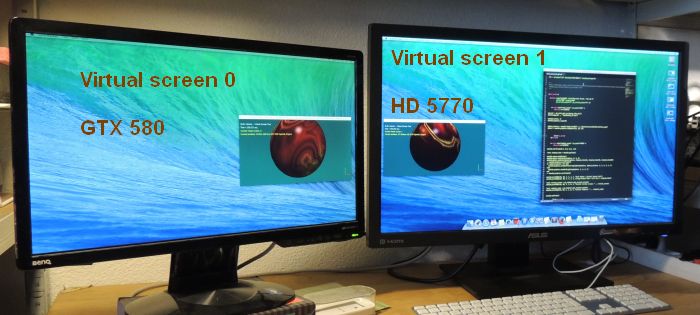

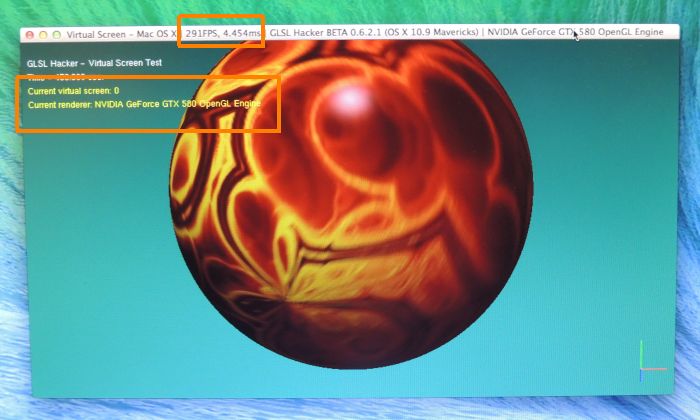

Virtual screen 0 is the GTX 580 and virtual screen 1 is the HD 5770.

When a single instance of the demo is launched, it runs at 290 FPS on the GTX 580 and at 190 FPS on the HD 5770.

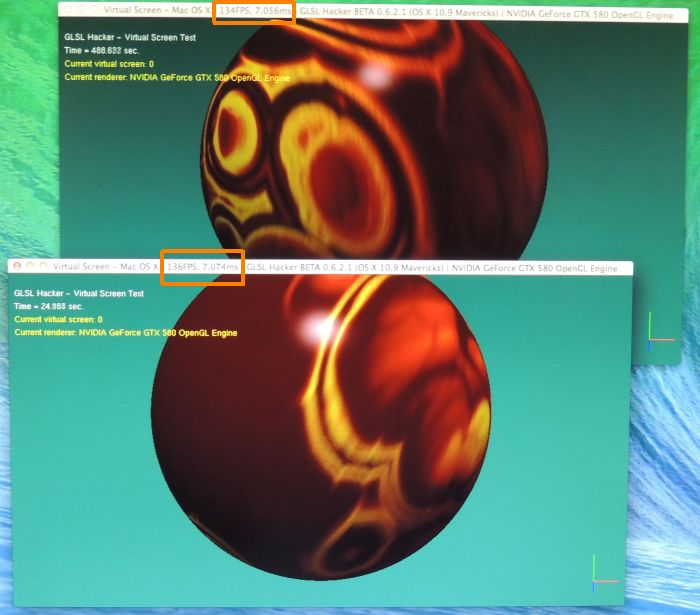

When two instances are launched on the GTX 580, the framerate is around 135 FPS:

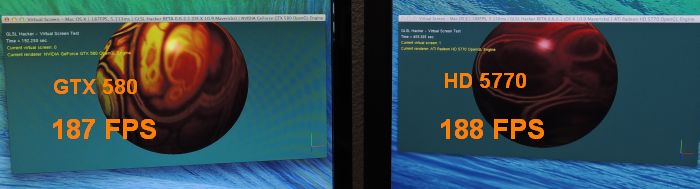

When each instance runs in its own virtual screen, the FPS goes up but it seems to be limited by the slowest graphics card:

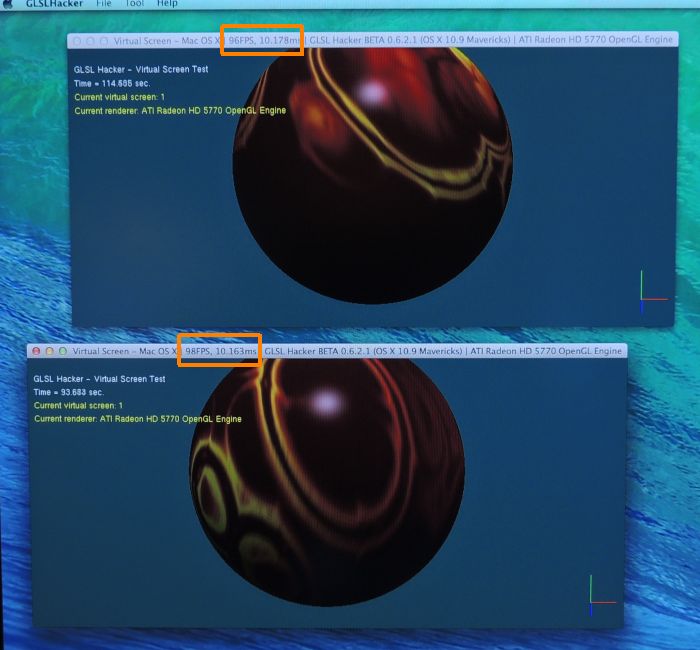

To confirm what I just said, I replaced the GTX 580 with a second HD 5770 (yeah, the power of hackintosh, I love it!). When two instances of the GLSL Hacker demo run in the same virtual screen, the framerate is 98 FPS:

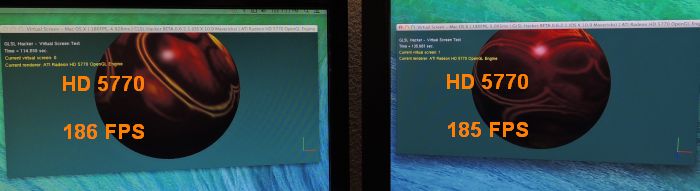

Now when each instance is running in a separate virtual screen, the framerate is close to the single instance one: 186 FPS.

When the graphics cards are identical, each instance of an OpenGL app can run at full speed in a virtual screen. When graphics cards are different, all OpenGL instances run at the framerate of the slowest GPU.
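The arithmetic behind that conclusion can be spelled out with a few lines of C. This is only a worked calculation on the framerates quoted above for the HD 5770 (190 FPS single instance, 98 FPS with two instances sharing a virtual screen, 186 FPS with one instance per virtual screen); the macro and function names are made up for the illustration.

```c
#include <stdio.h>

/* FPS figures quoted in the article for the Radeon HD 5770. */
#define FPS_SINGLE    190.0  /* one instance alone */
#define FPS_SHARED     98.0  /* two instances in the same virtual screen */
#define FPS_SEPARATE  186.0  /* one instance per virtual screen */

/* Expresses value as a percentage of reference. */
double percent_of(double value, double reference)
{
    return 100.0 * value / reference;
}

/* Prints how each configuration compares to a single instance. */
void print_scaling(void)
{
    printf("shared screen:    %.1f%% of single-instance speed\n",
           percent_of(FPS_SHARED, FPS_SINGLE));
    printf("separate screens: %.1f%% of single-instance speed\n",
           percent_of(FPS_SEPARATE, FPS_SINGLE));
    printf("gain from separating: %.2fx per instance\n",
           FPS_SEPARATE / FPS_SHARED);
}
```

With these numbers, each instance keeps about 98% of the single-instance framerate when it has its own virtual screen, against roughly 52% when both instances share one GPU.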

When you drag an OpenGL app from one monitor to the other, OS X switches to the new virtual screen as soon as that virtual screen contains the majority of the window’s pixels. In the following screenshots, I moved the GLSL Hacker demo from virtual screen 1 (Radeon HD 5770) to virtual screen 0 (GeForce GTX 580):
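The "majority of the pixels" rule can be modelled with a small rectangle-overlap test. The sketch below is not Apple's implementation, which is not described here; it is only an illustration of the rule, and the `Rect` type and both function names are hypothetical.

```c
/* Axis-aligned rectangle in desktop coordinates (pixels). */
typedef struct {
    int x, y;  /* top-left corner */
    int w, h;  /* width and height */
} Rect;

/* Area of the intersection of two rectangles, 0 if they do not overlap. */
long overlap_area(Rect a, Rect b)
{
    int left   = a.x > b.x ? a.x : b.x;
    int top    = a.y > b.y ? a.y : b.y;
    int right  = (a.x + a.w) < (b.x + b.w) ? (a.x + a.w) : (b.x + b.w);
    int bottom = (a.y + a.h) < (b.y + b.h) ? (a.y + a.h) : (b.y + b.h);

    if (right <= left || bottom <= top)
        return 0;
    return (long)(right - left) * (long)(bottom - top);
}

/* Index of the monitor that holds the largest share of the window's
   pixels, or -1 if the window does not touch any monitor. */
int majority_screen(Rect window, const Rect *monitors, int count)
{
    int best = -1;
    long best_area = 0;

    for (int i = 0; i < count; i++) {
        long area = overlap_area(window, monitors[i]);
        if (area > best_area) {
            best_area = area;
            best = i;
        }
    }
    return best;
}
```

For two 1920x1080 monitors placed side by side, an 800x600 window whose left edge is at x = 1500 still has 420 of its 800 columns on the first monitor, so the model returns 0. Move it to x = 1600 and only 320 columns remain there, so the model returns 1.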


It seems you are confusing SLI/CF with multi-monitor capabilities. SLI/CF uses two cards to render the same scene with AFR, which accelerates games. A virtual screen is like connecting two monitors or TVs to the same video card.

Maybe I expressed my idea poorly: what are the solutions to use / stress several GPUs in OpenGL under Windows? SLI/CF is the only way I know. What I wanted to show is how we can run OpenGL code (an OpenGL app) on a particular GPU under OS X, which is not possible under Windows. An OS X virtual screen can be seen as a multi-renderer capability rather than a multi-monitor capability.

On Windows, it’s possible with Quadro cards and the NV_gpu_affinity extension.