Article index:

- 1 – Rasterization pattern

- 2 – Number of Processed Vertices

- 3 – Number of Processed Fragments

- 4 – Bugs…

- 5 – References

4 – Bugs…

In the GLSL Hacker demos that come with this article, I use two atomic counters: one for counting the processed vertices and the other for counting the processed fragments (the demos are available in the moon3d/gl-420-atomic_counters/ folder of the Code Sample Pack).

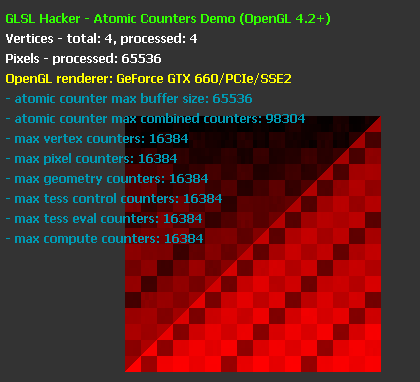

On NVIDIA GeForce cards there is no problem: both atomic counters hold the correct values. For the 256×256-pixel quad, we get 4 processed vertices and 65536 (256 × 256) processed pixels:

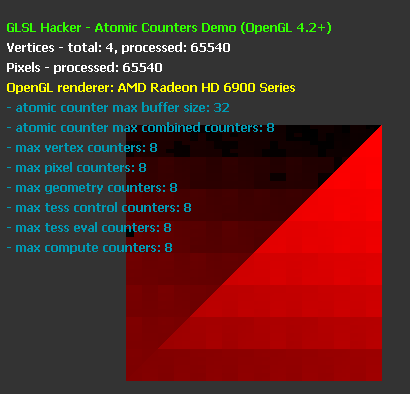

On AMD Radeon cards (tested with the latest Catalyst 13.10 beta and an HD 6970), only one atomic counter is taken into account, and all increments (done with atomicCounterIncrement) go to that counter. Result: it holds 4 vertices + 65536 pixels = 65540.

I tested two configurations: one atomic counter buffer with two elements, and two atomic counter buffers with one element each. In both cases, the bug shows up on AMD Radeon cards. Is the problem in the GLSL Hacker code or in the AMD drivers?

5 – References

Here are some useful links if you need more details about implementing atomic counter support in your 3D engine.

- GL_ARB_shader_atomic_counters specification

- OpenGL Atomic Counters @ Lighthouse3d

- OpenGL course notes, week 6: Image Samplers and Atomic Operations

- gl-420-atomic-counter.cpp sample from the OpenGL Samples Pack @ G-Truc

