Why? Isn’t GLSL the same on ATI and NVIDIA cards? Yes, of course the GLSL code is the same on both, but the compilation of that code is where the two vendors diverge: ATI has a strict GLSL compiler while NVIDIA’s is more lenient. Moreover, NVIDIA’s GLSL compiler is based on the Cg compiler, so you can use Cg / HLSL types in GLSL, like float3 in place of vec3…

I usually test all my GLSL shaders on both architectures but for my last tutorial about gamma correction I was a bit lazy and I only tested on my dev system (GeForce GTX 460). Verdict: users with a Radeon card are not able to run the demos.

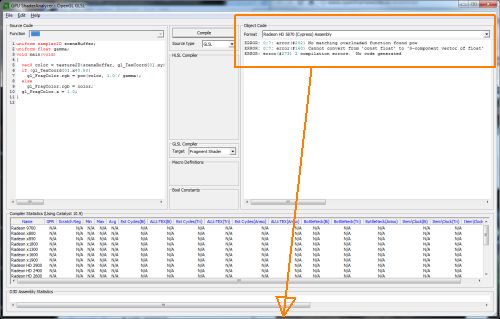

I was about to try the shader on my test bench with a Radeon HD 5870, but since I’m a big loafer I said to myself: wait a minute, I recently posted a news item about an AMD tool that profiles shaders for the Radeon architecture. That tool is GPU ShaderAnalyzer.

I quickly copied the following pixel shader into GPU ShaderAnalyzer:

uniform sampler2D sceneBuffer;
uniform float gamma;
void main(void)
{
  vec3 color = texture2D(sceneBuffer, gl_TexCoord[0].xy).rgb;
  if (gl_TexCoord[0].x < 0.50)
    gl_FragColor.rgb = pow(color, 1.0 / gamma);
  else
    gl_FragColor.rgb = color;
  gl_FragColor.a = 1.0;
}

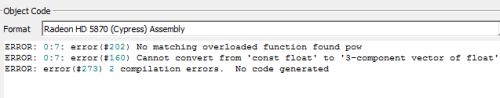

And here is what GPU ShaderAnalyzer told me:

The output of the GLSL compilation: error line 7

In no time, I found the error on line 7:

gl_FragColor.rgb = pow(color, 1.0 / gamma);

In this line, color is a vec3 while gamma is a float, and there is no pow() function in GLSL that accepts a vec3 as its first parameter and a float as its second. Both arguments must have the same type (float, vec2, vec3 or vec4). The pow() syntax is:

genType pow (genType x, genType y)

As you can see, AMD’s GLSL shader compiler did a good job by pointing out the error.

The fix is simple:

gl_FragColor.rgb = pow(color, vec3(1.0 / gamma));

This code now works fine on both NVIDIA and ATI cards.
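For reference, here is a minimal sketch of the whole pixel shader with the fix applied. The applyGamma() helper is a hypothetical name I introduce only for illustration (it is not part of the original demo), and I assume the same legacy built-ins (texture2D, gl_TexCoord, gl_FragColor) as in the shader above:

```glsl
// Fragment shader sketch, legacy GLSL (same built-ins as the original shader).
uniform sampler2D sceneBuffer;
uniform float gamma;

// Hypothetical helper, not part of the original demo.
vec3 applyGamma(vec3 color, float g)
{
    // genType pow(genType x, genType y): both arguments must have the
    // same type, so the scalar exponent is expanded to a vec3 first.
    vec3 exponent = vec3(1.0 / g);
    return pow(color, exponent);
}

void main(void)
{
    vec3 color = texture2D(sceneBuffer, gl_TexCoord[0].xy).rgb;

    // Left half of the screen: gamma-corrected. Right half: untouched.
    if (gl_TexCoord[0].x < 0.50)
        gl_FragColor.rgb = applyGamma(color, gamma);
    else
        gl_FragColor.rgb = color;

    gl_FragColor.a = 1.0;

    // Rejected by a strict compiler, no pow(vec3, float) overload exists:
    // gl_FragColor.rgb = pow(color, 1.0 / gamma);
}
```

Building the vec3 exponent explicitly keeps the shader within the GLSL specification, so it should not depend on a lenient compiler silently promoting the float.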

GPU ShaderAnalyzer is a useful tool for developers with an NVIDIA card in their dev station. It is freely downloadable, so there’s no reason to ignore it. And above all, always check your GLSL shaders on both NVIDIA and AMD/ATI cards!

Nice Tip!

PS I can’t believe you didn’t know about GPU ShaderAnalyzer! I’ve known about it for many years 🙂

Actually, I’ve known about it for 2 years, but I never thought of using it to validate Radeon shaders on a GeForce machine…

Nice one JeGX, thanks! Now the next step is to find out how the tool’s validation part works 🙂

OK I’ll bite… or you could just buy ATi next time 🙂