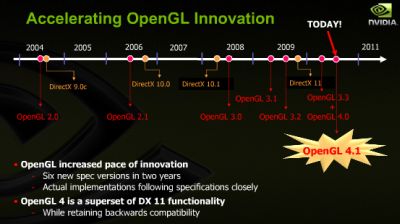

I think the following comment by OpenGL veteran Mark Kilgard (Principal System Software Engineer at NVIDIA) deserves a dedicated post because it’s very interesting reading and demonstrates a certain superiority of OpenGL over Direct3D…

If you look more deeply, it (OpenGL) is still behind (Direct3D).

MK: Really? What feature of DirectX 11 isn’t available with OpenGL 4.1 + CUDA or OpenCL? The only item you go on to mention isn’t a good example:

Because Direct3D 11 SM5 allows some of the DXCompute data structures to be used in pixel shaders as well.

MK: NVIDIA’s OpenGL can interoperate with buffers and textures from CUDA, OpenCL, DirectX, or even OptiX. DirectX 11 can only interop with DirectX buffers and surfaces. This seems like a situation where OpenGL is clearly MORE functional than Direct3D. You can look at the NV_gpu_program5, NV_shader_buffer_load, and ARB_texture_buffer_object extensions to see how an OpenGL shader can read from, and yes, even STORE to, buffers.

OpenGL has plenty of features not available in DirectX 11, such as line stipple, accumulation buffers, immediate mode, display lists, smooth lines, and access to shaders through both high-level shading languages (Cg or GLSL) and assembly language (NV_gpu_program5, NV_gpu_program4, ARB_fragment_program, etc.). And this all comes with cross-operating system portability, something DirectX 11 will never offer.
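For readers who never touched it, line stipple boils down to a simple per-fragment rule. glLineStipple(factor, pattern) repeats each bit of a 16-bit pattern `factor` times, starting from the least significant bit, and a line fragment is drawn only if its bit is 1. The Python sketch below models just that rule in software. The function name is made up, and it does not model the counter reset between separate GL_LINES segments. It says nothing about how any driver implements stippling.

```python
def stipple_mask(pattern: int, factor: int, length: int) -> list[bool]:
    """Which of `length` consecutive line fragments glLineStipple would keep (sketch)."""
    factor = max(1, min(factor, 256))  # GL clamps the repeat factor to [1, 256]
    pattern &= 0xFFFF                  # only the low 16 bits of the pattern are used
    mask = []
    for s in range(length):            # s plays the role of the stipple counter
        b = (s // factor) % 16         # pattern bit that applies to this fragment
        mask.append(bool((pattern >> b) & 1))
    return mask


# 0x00FF with factor 1: eight fragments on, eight off, repeating
print("".join("-" if keep else "." for keep in stipple_mask(0x00FF, 1, 32)))
# --------........--------........
```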

For example, OpenGL 4.1’s entire feature set, including programmable geometry and tessellation shaders and all the other DirectX 10 and 11 features, is available on Windows XP as well as Vista and Windows 7.

OpenGL 4.1 supports both OpenGL and Direct3D conventions. So in Direct3D, you are stuck with the Direct3D provoking vertex or shader window origin convention, but with OpenGL 4.1 you can now pick your convention.
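In API terms, both switches exist in core OpenGL. glProvokingVertex(GL_FIRST_VERTEX_CONVENTION) gives the Direct3D behaviour for flat-shaded attributes; OpenGL’s default is GL_LAST_VERTEX_CONVENTION. A GLSL fragment shader can also redeclare `layout(origin_upper_left) in vec4 gl_FragCoord;` to get an upper-left window origin. The Python sketch below only computes what those two switches change, and only for independent triangles and triangle strips. The helper names are hypothetical.

```python
FIRST = "GL_FIRST_VERTEX_CONVENTION"  # Direct3D-style
LAST = "GL_LAST_VERTEX_CONVENTION"    # OpenGL default


def provoking_vertex(mode: str, k: int, convention: str = LAST) -> int:
    """Index of the vertex whose flat-shaded attributes triangle k (0-based) uses."""
    if mode == "GL_TRIANGLES":
        first, last = 3 * k, 3 * k + 2  # independent triangles: vertices 3k..3k+2
    elif mode == "GL_TRIANGLE_STRIP":
        first, last = k, k + 2          # strip triangle k: vertices k..k+2
    else:
        raise ValueError(f"mode not modelled in this sketch: {mode}")
    return first if convention == FIRST else last


def frag_coord_y(y_lower_left: float, height: int) -> float:
    """gl_FragCoord.y with origin_upper_left, given the default lower-left value."""
    return height - y_lower_left
```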

NVIDIA OpenGL has lots of ways it surpasses DirectX 11 in functionality, such as support for bindless graphics (NV_vertex_buffer_unified_memory & NV_shader_buffer_load), arbitrary texture format swizzling (EXT_texture_swizzle), and Serial Digital Interface (SDI) video support (NV_present_video), including video capture (NV_video_capture).
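As a reference point, EXT_texture_swizzle was later promoted to core OpenGL 3.3 as ARB_texture_swizzle. It lets you set GL_TEXTURE_SWIZZLE_R/G/B/A on a texture with glTexParameteri, so a shader sampling that texture sees remapped channels. The sketch below models that remap on a single RGBA texel in Python. It illustrates the semantics only and is not NVIDIA’s implementation.

```python
SOURCE_INDEX = {"GL_RED": 0, "GL_GREEN": 1, "GL_BLUE": 2, "GL_ALPHA": 3}
IDENTITY = ("GL_RED", "GL_GREEN", "GL_BLUE", "GL_ALPHA")


def swizzle(texel, swz=IDENTITY):
    """What a shader would read from `texel` (r, g, b, a) under the given
    GL_TEXTURE_SWIZZLE_R/G/B/A settings (sketch of the semantics only)."""
    out = []
    for src in swz:
        if src == "GL_ZERO":
            out.append(0.0)
        elif src == "GL_ONE":
            out.append(1.0)
        else:
            out.append(texel[SOURCE_INDEX[src]])
    return tuple(out)


# A single-channel GL_RED texture (sampled as (r, 0, 0, 1)) made to read like legacy GL_LUMINANCE:
LUMINANCE = ("GL_RED", "GL_RED", "GL_RED", "GL_ONE")
print(swizzle((0.25, 0.0, 0.0, 1.0), LUMINANCE))  # (0.25, 0.25, 0.25, 1.0)
```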

BTW, you can get the actual PowerPoint slides from here:

(These slides are corrected and slightly expanded from the recorded presentation. For example, they include code for implementing adaptive PN triangles.)

“And this all comes with cross-operating system portability, something DirectX 11 will never offer.”

Well, Apple’s OpenGL 3.x/4.x support is nonexistent at the moment.

Apple’s Direct3D support is also non-existent at the moment and most likely until the end of time 🙂

Oh I knew it, I knew it: NVidia is the best. But one question, if we talk about cross-platform superiority: how many Linux people, for example, have NVidia OpenGL 4.1-capable video cards, and how many programs that use OGL 4.1 do they have?

So why does it matter if it’s superior on a technical level? Still, nobody uses it.

This is really a ridiculously daring comment.

Saying that OpenGL’s deprecated and proprietary features make OpenGL a superset of Direct3D 11 is just the wrong message. On one side we try to educate developers not to use deprecated features anymore because they are slow, even just pure-software, and even have serious programming drawbacks, and on the other side a big guy at nVidia says that…

Also, maybe NV_shader_buffer_load is cross-operating-system, but it’s not standard. Who the fuck cares about assembly language anymore? Oo

OpenGL rocks but this is just nVidia bullshit.

OpenGL is not at the level of Direct3D 11 feature-wise, and probably not development-wise or even performance-wise. This is easy to prove: launch any benchmark, look at the feature set and the development tools. That said, OpenGL has made huge progress in the past 2 years, and thanks to the efforts of the ARB, I think OpenGL is going to catch up feature-wise within a year. For performance and tools, we will need more time. Nothing has been won yet, but looking at the market it’s obvious that OpenGL is going to take more and more space in the coming years, which makes everyone’s work on the OpenGL ecosystem necessary and inevitable.

Then I know why ATi loses game benchmarks vs nVIDIA

’cause nVIDIA has more OpenGL extensions…

ha ha ha

More OpenGL extensions, more features nVIDIA has

>_<!

Why is OpenGL Superior to Direct3D?

1. Portability.

2. You can use tessellation and geometry shaders under WinXP ( yaaaay! ).

3. It’s much easier to maintain than DX, which forces you to rewrite a lot of code. With OpenGL you can just check for an extension and voilà.
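In practice, point 3 usually means testing the extension string, and a common pitfall is a plain substring search such as strstr() on glGetString(GL_EXTENSIONS). That fails because one extension name can be a prefix of another. Below is a minimal sketch of an exact-token check, written in Python over an example string rather than a live context. pick_fbo_path is a hypothetical helper showing the "check and fall back" pattern. On OpenGL 3.0+ contexts, querying names one at a time with glGetStringi(GL_EXTENSIONS, i) avoids the parsing altogether.

```python
def has_extension(extensions: str, name: str) -> bool:
    """Exact-name test against a space-separated GL_EXTENSIONS string."""
    # Splitting into tokens avoids matching "GL_EXT_texture" inside "GL_EXT_texture3D",
    # the classic bug of calling strstr() on glGetString(GL_EXTENSIONS).
    return name in extensions.split()


def pick_fbo_path(extensions: str) -> str:
    """Hypothetical fallback chain: prefer the ARB extension, then the EXT one."""
    if has_extension(extensions, "GL_ARB_framebuffer_object"):
        return "ARB"
    if has_extension(extensions, "GL_EXT_framebuffer_object"):
        return "EXT"
    return "none"


ext = "GL_ARB_multitexture GL_EXT_texture3D GL_EXT_framebuffer_object"
print("GL_EXT_texture" in ext)               # True: naive substring test, wrong
print(has_extension(ext, "GL_EXT_texture"))  # False: exact token test
print(pick_fbo_path(ext))                    # EXT
```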

Enough? I think yes.

to fool_gamer

But none of the real games use even OGL 3.0! The Doom 3 engine uses only OGL 2.0, and there’s Riddick: Dark Athena, which uses some extra extensions like EXT_framebuffer_object (WOW! It was 2008 and they finally used it AT LAST) and AMD_texture4 (BTW it’s disabled on my Radeon 4850), and that’s all. No one wants to develop a serious OpenGL game except Carmack.

With OpenGL ES, I think a lot of developers will be moving to OpenGL; I don’t see DX available for the mobile phone market yet!

Most devs will use DX as it’s easy for them to port their Xbox games over to Windows... but if you want to support Linux... then you’d better go OpenGL!

There are plenty of cross-platform OpenGL apps available for Linux and Windows. Second Life is one, and it uses OpenGL 3.2 if it’s available.

OpenGL is far superior as it allows you to support non-Microsoft software and hardware while still being compatible.

Doesn’t take a rocket scientist to work that out!

[quote]

no one wants to develop a serious OpenGL app

[/quote]

And WoW for Mac OS X…

And the zillions of Steam games for Mac OS X…

And any CAD program like Maya or XSI for Linux and Mac OS X…

And all the mobile games using OpenGL|ES…

So yep, nobody uses it, CLEARLY…

… and FurMark … 😀

>> On one side we try to educate developers not to use deprecated features anymore because they are slow, even just pure-software, and even have serious programming drawbacks, and on the other side a big guy at nVidia says that…

To be clear, NVIDIA provides full OpenGL compatibility.

The whole “deprecation” concept is something promulgated by a collection of OpenGL hardware vendors who have failed for years to provide quality OpenGL implementations. Maybe if they break API compatibility, that will help them improve the OpenGL implementations. Basically, because they can’t figure out how to deliver a quality, performant OpenGL implementation, they want to break the API.

Claims you are naively repeating that certain features got marked “deprecated” because they are “slow” or “pure software” are simply ill-informed.

Immediate mode, wide points, wide lines, line stipple, polygon stipple, display lists, two-sided lighting, edge flags, accumulation buffers, quadrilaterals, full polygon mode generality, the clamp texture mode, texture borders, automatic mipmap generation, alpha test [I could go on] ARE fully accelerated by NVIDIA hardware and drivers.
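Whatever one thinks of the accumulation buffer’s speed, its semantics are small enough to write down. Below is a Python sketch of what each glAccum(op, value) operation computes per pixel, using plain lists instead of a framebuffer. The function name is hypothetical. It is followed by the classic use: averaging several renders, e.g. for motion blur.

```python
def accum(op, value, acc, color):
    """Sketch of glAccum(op, value) on per-pixel lists; returns (acc, color)."""
    if op == "GL_LOAD":        # acc = color * value
        acc = [c * value for c in color]
    elif op == "GL_ACCUM":     # acc += color * value
        acc = [a + c * value for a, c in zip(acc, color)]
    elif op == "GL_ADD":       # acc += value
        acc = [a + value for a in acc]
    elif op == "GL_MULT":      # acc *= value
        acc = [a * value for a in acc]
    elif op == "GL_RETURN":    # color = acc * value, clamped to [0, 1]
        color = [min(max(a * value, 0.0), 1.0) for a in acc]
    else:
        raise ValueError(f"unknown op: {op}")
    return acc, color


frames = [[1.0, 0.0], [0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]  # 4 renders of a 2-pixel image
acc, color = [0.0, 0.0], frames[0]                         # accumulation buffer starts cleared
for frame in frames:                                       # glAccum(GL_ACCUM, 1/N) after each render
    acc, color = accum("GL_ACCUM", 1 / len(frames), acc, frame)
acc, color = accum("GL_RETURN", 1.0, acc, color)           # write the average back
print(color)  # [0.5, 0.5]
```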

If you think it is a good use of your time to “avoid” these features because you think they are slow, I assure you they run at full speed and in hardware.

About the only features that truly were not in hardware are color index mode (optional anyway), selection/feedback, and surface evaluators (but needed to draw trimmed NURBS, and technically something NVIDIA’s Fermi could actually hardware accelerate today, ironic, huh?).
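For context on the evaluator item, here is what a one-dimensional evaluator computes. It is set up with glMap1f(GL_MAP1_VERTEX_3, u1, u2, stride, order, points) and sampled with glEvalCoord1f(u). The result is the point on the Bézier curve defined by the control points, after u is mapped from [u1, u2] onto [0, 1]. The Python sketch below computes that point with de Casteljau’s algorithm. It models the math only, not any driver path, and the function name is hypothetical.

```python
def eval_coord1(ctrl, u, u1=0.0, u2=1.0):
    """Point on the Bezier curve with control points `ctrl` at parameter u (sketch)."""
    t = (u - u1) / (u2 - u1)  # map the glMap1 domain [u1, u2] onto [0, 1]
    pts = [tuple(p) for p in ctrl]
    while len(pts) > 1:       # de Casteljau: repeated linear interpolation
        pts = [tuple((1 - t) * a + t * b for a, b in zip(p, q))
               for p, q in zip(pts, pts[1:])]
    return pts[0]


print(eval_coord1([(0, 0), (1, 2), (2, 0)], 0.5))  # (1.0, 1.0)
```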

It baffles me how a few misguided vocal developers can get excited about “deprecation” as if it is some wonderful thing. “Please, please, remove features! I want you to hide features of my GPU from me!”

Here’s my suggestion instead. Spend your programming cycles on better shaders, tuning your app to not be so CPU bound, using the new texture compression formats when available, enabling programmable tessellation, etc. because these things will actually make your 3D app better. On the other hand, banishing every last remnant of immediate mode or eliminating GL_QUADS from your code base, is really just busy-work.
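For a sense of what that busy-work amounts to: replacing GL_QUADS usually means drawing the same vertices with GL_TRIANGLES plus an index buffer that splits each quad in two. Below is a minimal Python sketch that builds that index list. The function name is hypothetical, and splitting along the first-to-third-vertex diagonal is an assumption. For flat, convex quads either diagonal covers the same pixels.

```python
def quads_to_triangle_indices(vertex_count: int) -> list[int]:
    """Index list that draws GL_QUADS-ordered vertices as GL_TRIANGLES (sketch)."""
    if vertex_count % 4:
        raise ValueError("GL_QUADS data needs a multiple of 4 vertices")
    indices = []
    for q in range(0, vertex_count, 4):
        # quad (q, q+1, q+2, q+3) -> triangles (q, q+1, q+2) and (q, q+2, q+3),
        # which keeps the quad's winding order
        indices += [q, q + 1, q + 2, q, q + 2, q + 3]
    return indices


print(quads_to_triangle_indices(8))  # [0, 1, 2, 0, 2, 3, 4, 5, 6, 4, 6, 7]
```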

If you don’t want to use perfectly functional features of the API, good for you. But don’t spread misinformation and waste people’s time by claiming people have to be “educated” to not use these perfectly functioning, fast features.

I laugh at the phrase “even have serious programming drawbacks”. It sounds so vaguely sinister. Maybe you think glLineStipple spawns computer viruses, but it doesn’t.

The NVIDIA OpenGL driver actually has to add *extra* code to support deprecation simply to make sure you aren’t making calls that obviously could otherwise “just work”.

You’ll probably respond, “yeah, but if you remove all this stuff, the driver could be soo much faster.”

That’s naive because, like I said, lots of the deprecated stuff is IN HARDWARE, and a well written OpenGL driver has no measurable overhead (function pointers and just-in-time code generation are wonderful things…) attributable to features you aren’t actually using.
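Below is a hypothetical illustration of the function-pointer idea; it is not NVIDIA’s driver design. A state change picks a specialised draw routine once. Every draw call then goes through that single pointer, so a feature that is switched off adds no per-draw check. Python stands in for C here, and the class and method names are made up.

```python
class Dispatch:
    """Hypothetical driver-style dispatch: state changes choose the draw routine,
    so the per-draw path never tests for features that are switched off."""

    def __init__(self):
        self.enabled = set()
        self.draw = self._draw_plain  # the "function pointer" called on every draw

    def enable(self, cap):
        self.enabled.add(cap)
        self._revalidate()

    def disable(self, cap):
        self.enabled.discard(cap)
        self._revalidate()

    def _revalidate(self):
        # Decide once per state change, not once per draw call.
        if "GL_LINE_STIPPLE" in self.enabled:
            self.draw = self._draw_stippled
        else:
            self.draw = self._draw_plain

    def _draw_plain(self, n):
        return f"plain x{n}"

    def _draw_stippled(self, n):
        return f"stippled x{n}"
```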

– Mark

OpenGL is more powerful in terms of what you can do with it.

However, it still lacks two things (which Khronos is working on, so we should see them coming in the next year or so):

– true multithreading

– direct state access

other than that, there isn’t much about OpenGL that’s behind DirectX.

Except Order Independent Transparency without EXT or proprietary extensions.
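For context on what OIT has to compute, one widely used approach works in two passes. First, it stores every transparent fragment of a pixel, for example in a per-pixel linked list written through D3D11 UAVs or OpenGL image load/store. A later pass then sorts those fragments by depth and blends them far to near. Below is a Python sketch of that resolve step for one pixel. It assumes larger depth means farther and uses non-premultiplied "over" blending. The function name is hypothetical.

```python
def resolve_pixel(fragments, background):
    """Composite one pixel's (depth, (r, g, b), alpha) fragments in any input order.

    Sorting far-to-near before blending is what makes the result independent of
    the order in which the fragments were submitted."""
    color = background
    for depth, rgb, alpha in sorted(fragments, key=lambda f: f[0], reverse=True):
        # "over" blend: src * alpha + dst * (1 - alpha)
        color = tuple(s * alpha + d * (1 - alpha) for s, d in zip(rgb, color))
    return color


frags = [(0.2, (1, 0, 0), 0.5), (0.8, (0, 0, 1), 0.5)]  # near red, far blue
print(resolve_pixel(frags, (0, 0, 0)))  # (0.5, 0.0, 0.25)
```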

Hopefully OpenGL will improve and add all those things. I’m sure it will happen. Everybody should be using OpenGL (when it does not sacrifice features) because it’s cross-platform.

http://software.intel.com/en-us/articles/opengl-extensions-supported-in-intel-4-series-express-chipsets-and-beyond/

http://developer.apple.com/graphicsimaging/opengl/capabilities/

http://mesa3d.org/intro.html

Yes, NVIDIA and AMD provide modern OpenGL support, but the supplier of nearly 70% of graphics cores (Intel) does not. Consequently, as the main contributor to and client of the Apple and Mesa OpenGL stacks, it has no interest in adding extra functionality to them. AMD’s GPU-CPU integration initiative will end up the same way.

For years Intel sold outdated cores until Microsoft set the bar (yes, using DirectX). Apple and the Linux community currently seem powerless in this task.

Remember advanced 3D sound technologies like EAX and A3D? NVIDIA may become the only GPU player interested in cutting-edge graphics technologies, and then it will be welcomed into a Creative-like “death camp”.

@Mark

I couldn’t disagree more with you. Using glBegin is freaking slow and any experienced OpenGL developer knows that. I can’t see any good use for the accumulation buffer, which is a software feature too.

I remember a developer saying, “I want to buy a Fermi card to make my software run faster”, while he was doing all of his rendering with glBegin! I bet that simply by switching to VBOs I could make his software run faster on a G92.

The deprecation profile is great and I love it because for the first time we have a chance to tell developers “look, this is not recommended by the ARB”.

Until the release of the SuperBible V5, the main OpenGL documentation was the Red Book, the “official guide”. This book remains everyone’s main source for learning OpenGL, so developers start by learning glBegin and the programming methods of the past decade. How legitimate is it for experienced developers to say “don’t use it” in this situation? I saw the worst and slowest OpenGL code of my life written by the best programmer I’ve met. It took 6 months to make that code efficient because that programmer didn’t have much consideration for OpenGL; his consideration was for his software design, which perfectly embraced the glBegin approach… too bad. Brilliant code, brilliant design, but it doesn’t fit the GPU.

Most applications where OpenGL is used, and will be used in the near future, are not games but software where OpenGL is not the critical part, which leads developers to use it without much concern for how it does what it does. Let’s be honest about that: this is what we all do most of the time. I don’t have much concern about how my XML parser works; OK, SAX or DOM, but will I benchmark all the functions I am using? No. Same for my sound library, window library, etc…

With a broad range of developers it’s important to provide some clear and simple guidelines, and that’s why I think OpenGL ES 2 was chosen over OpenGL 3.x. It’s not complicated to use shaders or vertex buffers.

I am not saying that a developer is required to be pedantic about not using deprecated features; what I am saying is that it is the duty of the ARB to give guidelines for writing effective OpenGL code. I agree that rewriting non-critical code that uses deprecated features is a waste of time, but writing new code using deprecated features is a waste of time as well.

“You’ll probably respond, “yeah, but if you remove all this stuff, the driver could be soo much faster.”” Seriously, I don’t know where you got this idea… I don’t know any OpenGL developer who has this in mind. This is just the opposite extreme of “use accumulation buffers, it’s fast!”

That’s it for the deprecation model, since you have a strong opinion about it, but feature-wise there are quite a few missing things: load and store on buffers and images (RWBuffer and RWTexture in D3D), effective multithreaded rendering, and UAV counters.
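For readers unfamiliar with UAV counters: an append-style buffer gives each writer a unique slot. The writer atomically increments a counter and uses the value it had before the increment, which is what D3D11’s IncrementCounter() returns. GLSL’s atomicCounterIncrement() from ARB_shader_atomic_counters also returns the pre-increment value. Below is a CPU-side Python sketch in which a lock stands in for the GPU atomic. The AppendBuffer class is hypothetical, and it does not handle overflowing the capacity.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class AppendBuffer:
    """Sketch of a counter-backed append buffer: each writer atomically bumps the
    counter and writes into the slot index it got back (the pre-increment value)."""

    def __init__(self, capacity):
        self.data = [None] * capacity
        self._counter = 0
        self._lock = threading.Lock()

    def increment_counter(self):
        with self._lock:  # stands in for the GPU's atomic increment
            slot = self._counter
            self._counter += 1
            return slot

    def append(self, value):
        slot = self.increment_counter()  # no capacity check in this sketch
        self.data[slot] = value
        return slot


buf = AppendBuffer(64)
with ThreadPoolExecutor(max_workers=8) as pool:
    slots = list(pool.map(buf.append, range(64)))  # 64 concurrent "shader invocations"
```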

Finally, I agree with JFFulcrum: nVidia is not alone, and nVidia ending up alone would be the last thing to wish for!

Mark,

but don’t you think that removing some of these features will also make HARDWARE faster?

I’m all for OpenGL because I’m using non-Microsoft platforms, too, but I believe that the situation where you have to provide different code paths for some vendor/driver combinations is not the way to go. NVIDIA is great and I’m using it, but I want my program to run on ATI (and even Intel) cards, too.

Like you, I also want vendors to provide quality OpenGL implementations. But if they have reasonable arguments that they cannot do this with the current feature set, then “breaking the API” is not that bad an idea.

OpenGL API was designed with different goals (and for different hardware) than we currently have. It needs to be more flexible yet less fragmented to regain the market share, and luckily things go in that direction, albeit somewhat slowly.

@RCL

It won’t make hardware faster; the only thing it could do is save a few transistors, meaning a bit of die area. However, this bit of die area is likely to be insignificant.

A whole rasterizer is less than 1 mm² on a die, which is a good example of why Larrabee failed. If any specific hardware is required to support some deprecated features the way they are supported currently, it’s just a few registers: less than 1 nm² on the die?

1.) NVIDIA OpenGL != OpenGL. Actually, Direct3D has progressed far better in standardising features across hardware vendors than OpenGL ever managed.

2.) ACCUM buffer? It isn’t properly hardware-accelerated in lots and lots of implementations.

3.) Line stipple: same.

4.) Display lists are crap and slow.

5.) Immediate mode is crap and slow.

Mark Kilgard has reduced himself to nVidia PR marketing. Poor performance. Epic failure.

“The whole “deprecation” concept is something promulgated by a collection of OpenGL hardware vendors who have failed for years to provide quality OpenGL implementations.”

Like there are so many vendors out there… Anyway, this is just wtf. I can’t believe Mark Kilgard really said this BS. o_O

I understand that nVidia has no intention to break backward compatibility, so they leave the support for old crap techniques in their drivers (which is the right thing to do imo!), but that above statement… omg man.

I’m kinda shocked.

@DrBalthar

1.) NVIDIA OpenGL != OpenGL

Agreed

2.) ACCUM Buffer?

No hardware accelerates it!

4.) Display lists are crap and slow

I disagree with you: it remains a great feature for performance, but its design is problematic and it has become really hard to use now.
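Here is one concrete example of the design problem. In GL_COMPILE mode a display list records commands with their argument data copied at compile time; for instance, glVertex3fv reads its array while the list is being built. Later changes to the application’s data therefore never reach the list unless it is recompiled. Below is a toy Python model of glNewList/glEndList/glCallList that shows this behaviour. The class and its methods are hypothetical stand-ins, not real bindings.

```python
class ToyDisplayLists:
    """Toy model of glNewList(id, GL_COMPILE) / glEndList / glCallList (names hypothetical)."""

    def __init__(self):
        self.executed = []      # commands that actually "reached the GPU", in order
        self.lists = {}         # list id -> recorded commands
        self._compiling = None  # (id, commands) between new_list() and end_list()

    def new_list(self, list_id):
        self._compiling = (list_id, [])

    def end_list(self):
        list_id, commands = self._compiling
        self.lists[list_id] = commands
        self._compiling = None

    def vertex3fv(self, v):
        command = ("vertex", tuple(v))  # the array is read (copied) right now
        if self._compiling is not None:
            self._compiling[1].append(command)  # GL_COMPILE: record, don't execute
        else:
            self.executed.append(command)

    def call_list(self, list_id):
        self.executed.extend(self.lists[list_id])  # replay exactly what was recorded


gl = ToyDisplayLists()
position = [0.0, 0.0, 0.0]
gl.new_list(1)
gl.vertex3fv(position)
gl.end_list()
position[0] = 5.0  # the application's data changes after compilation...
gl.call_list(1)
print(gl.executed)  # [('vertex', (0.0, 0.0, 0.0))]: the list still holds the old value
```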

@DrBalthar:

Regarding 1) That is because DX is in the strong hands of a single company, while OpenGL is like a cart pulled by many horses trying to go in different directions at the same time. That’s normal, I guess.

The only solution, in my opinion, would be to get rid of “branded” extensions like NV_* for good and not even publish them in the official extension registry unless they are supported by at least the two major vendors and are thus renamed to ARB_* or EXT_*. This would scare developers off vendor-specific extensions for good, and eventually vendors would give up pushing them into our faces. Now that we’re at it…

Did anyone ever seriously use a vendor-specific extension for anything other than a tech demo/test/fun project?

@Groovounent

As far as I remember, the ACCUM buffer used to be hardware-accelerated on some expensive SGI workstations back in the day, using the InfiniteReality architecture.

@DrBalthar

Lol, fair enough!

Agree with Mark. Breaking backward compatibility is a bad idea. 3D engine writers want to render polygons, not calculate quantum physics on a GPU.

Also, breaking backward compatibility will NOT fix vendors’ problems with the specifications, because the missing features are the newer features, not the old ones. If a vendor is unable to make quads work, or unable to implement display lists, I suggest they CULTIVATE POTATOES instead of manufacturing graphics cards. Seriously. I know, because I have already programmed it: I am the creator of the TitaniumGL OpenGL drivers, and programming display list support took 2 days. Programming the WHOLE immediate mode took around 3+2+1 days. And this is all from-scratch code, no copy-paste, no reliance on DX or other libraries… It’s just simple. But I hope Intel, SiS, and other third-tier vendors will never produce better drivers, because then the number of my users would decrease 😀

“NVIDIA OpenGL != OpenGL”

Well, none of the NVIDIA samples from the OpenGL SDK and Cg Toolkit work with my Radeon 4850. So I totally agree!

I believe too many cooks spoil the broth. Both NV and ATI just need to support all of OpenGL and not have vendor-specific GL extensions, so that what works on one will work on the other… Both manufacturers need to just submit their ideas to the Khronos Group, which then has the final yay/nay say, and then both vendors have to fully support the extension.

^ But the guys from NVidia can’t! They use their own NV_ extensions when they want to develop applications that aren’t compatible with ATI hardware. The NV OpenGL SDK is a great example (BTW the NV DX SDK works just FINE with ATI hardware).

It’s ok to deprecate (and hopefully later fully remove) stuff. But just give us a standardized replacement layer, which provides the same ease of glBegin&co for drawing simple things. We still need this, e.g. in interactive apps for drawing UI elements etc…
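As a sketch only, such a layer could collect glBegin/glColor/glVertex-style calls into one interleaved array. It would then hand back (mode, first, count), ready for a single glDrawArrays from a vertex buffer. Python is used for brevity. ImmediateBatch and its methods are hypothetical, and only GL_TRIANGLES and GL_LINES are handled.

```python
class ImmediateBatch:
    """Hypothetical glBegin/glColor/glVertex-style helper that records vertices into
    one interleaved array (x, y, z, r, g, b) for a single buffer upload."""

    FLOATS_PER_VERTEX = 6

    def __init__(self):
        self.current_color = (1.0, 1.0, 1.0)  # sticky state, like glColor3f
        self.vertices = []                     # interleaved floats for the vertex buffer
        self._mode = None
        self._first = 0

    def begin(self, mode):
        if mode not in ("GL_TRIANGLES", "GL_LINES"):
            raise ValueError(f"mode not handled by this sketch: {mode}")
        if self._mode is not None:
            raise RuntimeError("begin() called twice without end()")
        self._mode = mode
        self._first = len(self.vertices) // self.FLOATS_PER_VERTEX

    def color3f(self, r, g, b):
        self.current_color = (r, g, b)

    def vertex3f(self, x, y, z):
        # each vertex snapshots the current colour, as immediate mode does
        self.vertices.extend((x, y, z, *self.current_color))

    def end(self):
        """Close the batch; returns the arguments for glDrawArrays(mode, first, count)."""
        count = len(self.vertices) // self.FLOATS_PER_VERTEX - self._first
        mode, self._mode = self._mode, None
        return mode, self._first, count
```

Keeping the colour as sticky state mirrors how glColor behaves, so UI code written in the old style ports over almost line for line.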

How about installation? I’ve counted: I’ve gone down 11 forked failure paths trying to get OpenGL installed and a test program working for *either* VC++ 2010 Express OR the latest gcc (with the latest Eclipse as IDE, but that’s beside the point.)

Anyone have a link for a 12th fork I can cross my fingers and try, as hope springs eternal?

Alls I wants ta do is have a nice little 3D programmatic interface so I can eff around with some ideas on my own.